Likewise, in January of this year, New York Times columnist Thomas Friedman told a gathering of the Professional Convention Management Association:

Never have more people had available to them cheap tools of innovation. […] So, oh my goodness, we are going to see an explosion of innovation that will be creative destruction on steroids. There will be so many new things and the old is going to go out faster than ever. […] The usefulness of what you know today will become obsolete faster due to how fast the pace of change is accelerating.

More broadly, a large survey of experts by the Pew Research Center finds that the “new normal” in the coming years will be “far more tech-driven,” with respondents using words like “inflection point,” “punctuated equilibrium,” “unthinkable scale,” “exponential process,” “massive disruption,” and “unprecedented challenge.” At its “annual flagship event on technology strategies” last fall, MIT Technology Review organized its discussion around the proposition that “our world has hit an inflection point.”

Such hype is nothing new. As MIT economists Erik Brynjolfsson and Andrew McAfee argued in their 2014 book, The Second Machine Age:

Self-driving cars, Jeopardy! champion supercomputers, and a variety of useful robots have […] contribute[d] to the impression that we’re at an inflection point. […] The digital progress we’ve seen recently is […] just a small indication of what’s to come, [because] […] the nature of technological progress in the era of digital hardware, software, and networks […] is exponential, digital, and combinatorial. [Emphasis theirs.]

Sounding a similar note in a 2018 keynote on technological “accelerations,” Friedman himself averred that the year 2007 “may be understood in time as one of the greatest technological inflection points” of the modern era. Around that time, Apple unveiled the iPhone; Google bought YouTube and introduced the Android mobile operating system; IBM launched Watson; Amazon released the Kindle; Satoshi Nakamoto conceived of Bitcoin; Airbnb was founded; and Netflix began its video-streaming service.

Conjuring the image of an “inflection point” is a favored device among those who want us to believe that we are in the midst of a world-historic transformation. But there is a glaring problem with such arguments: they all rely on an incorrect meaning of the central term. In mathematics, an inflection point is the place on a curve where the curvature changes sign, that is, where the curve switches from concave to convex (or vice versa).

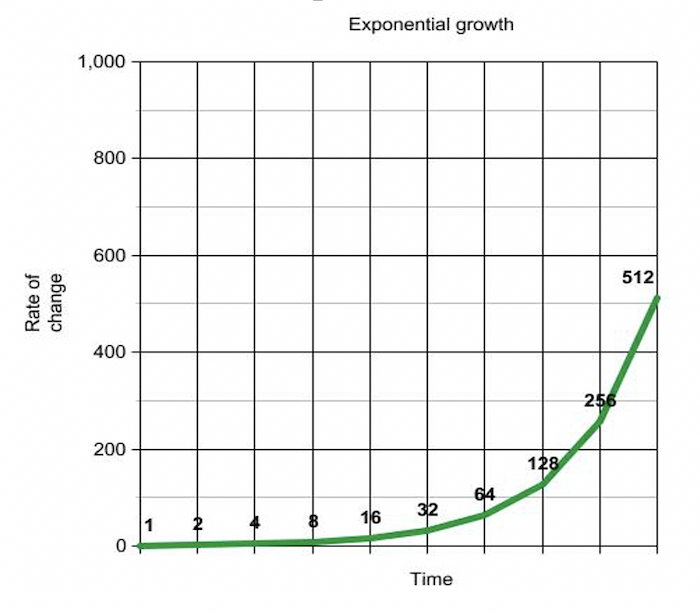

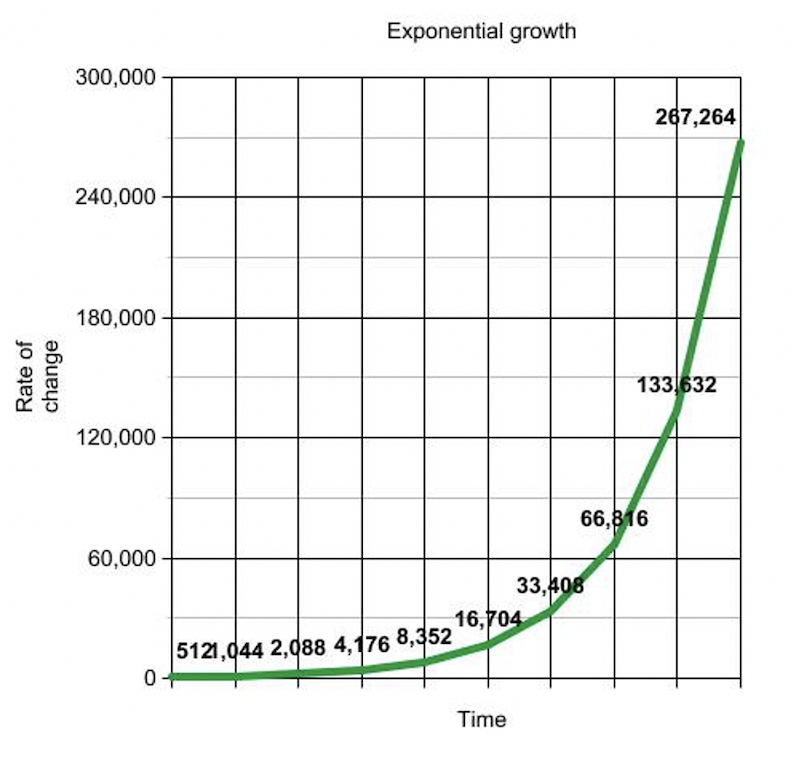
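Stated a little more formally (this is the standard textbook definition, not notation drawn from any of the authors quoted here), for a twice-differentiable function $f$, a point $x_0$ is an inflection point when the second derivative changes sign there:

$$
f''(x) < 0 \ \text{for } x < x_0 \text{ near } x_0 \quad\text{and}\quad f''(x) > 0 \ \text{for } x > x_0 \text{ near } x_0 \quad (\text{or the reverse}).
$$

For example, $f(x) = x^3$ has $f''(x) = 6x$, which changes sign at $x_0 = 0$. By contrast, exponential growth $f(x) = a\,b^{x}$ with $a > 0$ and $b > 1$ has $f''(x) = a\,(\ln b)^2\,b^{x} > 0$ everywhere, so it has no inflection point at all.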

This is no mere technical distinction. An inflection point is an objective feature of a trend line, which means that one’s perception of it will not change when one zooms in or out on the graph. By contrast, what Friedman, Brynjolfsson, and McAfee describe as an “inflection point” is wholly subjective. The “explosions” of innovation they perceive are purely impressionistic. In a graph depicting exponential growth, one can designate essentially any point as explosive.

Is the “explosion” at value 128? …

Or is it at value 33,408?

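Viewed in this light, we can see that the term is being used to convey emphasis rather than substance. A true inflection point would show the curve switching from bending one way to bending the other, and a curve of pure exponential growth never does that: it bends upward everywhere.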
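The point can be checked numerically. The sketch below is illustrative only: the function names, the doubling series, and the 10 percent threshold for where a curve "looks flat" are all assumptions chosen for the example, not anything taken from the authors discussed here. It shows that the apparent "knee" of a doubling curve always sits the same distance from the right edge of whatever window one chooses, and that the curve's bend never changes direction.

```python
import math


def apparent_knee(window_end, fraction=0.1, base=2.0):
    """Time at which base**t reaches `fraction` of its height at window_end.

    On a linear axis the curve looks nearly flat to the left of this point,
    so this is where a viewer is tempted to see the "knee". The 10 percent
    default is an arbitrary, hypothetical threshold.
    """
    return window_end + math.log(fraction, base)


def curvature_sign_changes(values):
    """Count sign changes in the discrete second differences of a series."""
    second = [values[i - 1] - 2 * values[i] + values[i + 1]
              for i in range(1, len(values) - 1)]
    signs = [s > 0 for s in second if s != 0]  # ignore exact zeros
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


# The "knee" moves with the window: it is always the same distance
# from the right-hand edge, wherever that edge happens to be.
for window_end in (7, 15, 30):
    knee = apparent_knee(window_end)
    print(f"window ends at t={window_end}: knee appears near t={knee:.2f}")

# A doubling series bends the same way throughout; a cubic does not.
doubling = [2 ** t for t in range(21)]
cubic = [(t - 10) ** 3 for t in range(21)]
print("sign changes, doubling series:", curvature_sign_changes(doubling))
print("sign changes, cubic series:", curvature_sign_changes(cubic))
```

Changing `fraction` shifts the knee by a fixed amount but does not anchor it to any particular date or value, which is the sense in which the "explosion" lies in the framing rather than in the curve.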

Faced with this problem, the inflection pointillists would offer a backstop: the “bend in the knee,” or as Brynjolfsson and McAfee put it, “a bend in the curve where many technologies that used to be found only in science fiction are becoming everyday reality.”

But this device suffers from the same problem. Two observers looking at the same curve could each designate a different “knee,” and neither would be wrong. But nor would either have any idea where on the curve the next truly consequential breakthrough may lie. One person’s “slow-growth phase” may be another’s “inflection point,” and yet another’s “rapid-growth phase.” In Brynjolfsson and McAfee’s case, the central contention is that we have reached “a point where the curve starts to bend a lot — because of computers.” But whereas “bend” is an objective property of the curve, “a lot” is a subjective judgment that assumes a particular perspective.

To Infinity, and Nowhere

Underpinning this broader narrative of technological progress through digitalization is the logic of Moore’s Law. In a 1965 paper, Intel co-founder Gordon Moore observed that the number of transistors on an integrated circuit had been doubling about every year, a pace he revised in 1975 to about every two years. For decades, this rate of growth was borne out (though it obviously cannot continue forever), leading the techno-utopian guru Ray Kurzweil to develop his own Law of Accelerating Returns: “The rate of change in a wide variety of evolutionary systems (including but not limited to the growth of technologies) tends to increase exponentially.”

It is based on this assumption that the term “exponential” has come to be wildly overused. For those hyping a given technology, the presence of exponentiality is presented as a sufficient condition for making any achievement seem imminent, whether it be curing cancer, colonizing Mars, or solving climate change with a yet-to-be-invented technology.

Hence, in Infinite Progress, the entrepreneur Byron Reese (who is not a physician) boasts that, “Armed with the data to develop medical knowledge and wisdom, and the technological tools to enable medical progress at an ever-quickening pace, we can confidently foresee a day when humanity will overcome” all diseases, even cancer. Writing in 2013, Reese concluded that, “We will do much more in the next twenty years than in the preceding one hundred. After that, more in five years than those twenty. Then more in one year than those five. Given all this, do you really believe disease has a chance?”

The problem, of course, is that even if one accepts that technological progress against something like cancer is accelerating, that doesn’t mean “the cure” is within sight. The argument assumes that we already know what it would take to cure cancer, even though we will not have that knowledge until we’ve already done it. Short of that information, claims about exponential technological progress aren’t nearly as meaningful as they sound. When Moore devised his “law,” he simply had to compare the number of transistors in an integrated circuit two years ago with the number in today’s circuits, then extrapolate from there. But knowing the pace of travel doesn’t tell you when you will reach a destination whose distance from you is unknown.
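A few lines of arithmetic make the gap concrete. In the sketch below, the function name, the two-year doubling period, and the candidate targets are all hypothetical values picked for illustration. The doubling period is treated as known, as it was for Moore, while the size of the goal is left open.

```python
import math


def years_to_target(current, target, doubling_years):
    """Years until a quantity that doubles every `doubling_years` reaches `target`."""
    if current <= 0 or target <= 0 or doubling_years <= 0:
        raise ValueError("all arguments must be positive")
    return doubling_years * math.log2(target / current)


# The pace is fixed, but the arrival time depends entirely on a target
# that nobody can specify in advance (these targets are made up).
for target in (1e3, 1e6, 1e12):
    years = years_to_target(1.0, target, 2.0)
    print(f"assumed target {target:.0e}: about {years:.0f} years away")
```

The same growth rate yields very different arrival dates depending on the assumed target, so knowing the rate alone settles nothing about when the goal will be reached.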

Moreover, even if human biology were to be converted into digital information processing — as Kurzweil believes — it doesn’t follow that cataloging such data would lead to a complete understanding of complex biological phenomena. After all, we already have more socioeconomic data than any other society in history, yet economists still consistently fail to foresee looming meltdowns, nor can they even agree on the answers to basic, seemingly empirical questions, such as the effect of a particular tax policy on GDP growth.

The reification of data is, arguably, the defining feature of the information age. Entire industries rest on the assumption that more data — and more data-analytic power — will yield ever-more fruitful results. But the flaws in such thinking have long been apparent. As the cultural critic Neil Postman argued 30 years ago, the “accumulation of reliable information about nature,” society, and the “human soul” has not automatically ushered in a better world. Just as there is the “problem of information scarcity,” so too are there “dangers of information glut” — a problem that should be familiar to anyone who has tried to make sense of the world through Facebook or Twitter.

But even when we have wrestled information into control (through digitalization), even when we have squeezed it for insights (with AI), it is a mistake to conflate the resulting information with knowledge, let alone understanding. As Postman warned, the exponential growth of information can lead us down rabbit holes without actually telling us what we need to know. “Is it lack of information about how to grow food that keeps millions at starvation levels?” he asks. “Is it lack of information that brings soaring crime rates and physical decay to our cities? Is it lack of information that leads to high divorce rates and keeps the beds of mental institutions filled to overflowing?”

In the world described by Kurzweil’s Law of Accelerating Returns, the present will always be an exciting time to be alive (and tomorrow will always be even more exciting than today). The disappointment doesn’t come until we pause and reflect on the past. That is when we can see that many previous “explosions” were not as consequential as they had been cracked up to be. At the end of the day, enthusiastic declarations of “inflection points” are no more than intimations of the speaker’s own excitement. The new and novel will always seem special, particularly when we have been primed routinely to expect exponential improvements and imminent breakthroughs like the mRNA vaccines against COVID-19.

In looking ahead, we should recognize that this trust in progress is an expression of blind faith, one that ignores the nature of the problems technology is expected to solve. In the case of the pandemic, humanity, with its exponentially advancing technology, was pitted against an adversary that also had recourse to the power of exponentiality.

The countries that performed worst in the pre-vaccine months were those that failed to grasp the implications of exponential contagion. In a recent book on Britain’s fiasco of a response to the crisis, Jonathan Calvert and George Arbuthnott show how Prime Minister Boris Johnson’s decision to eschew lockdowns when new infections were “only” in the thousands set the stage for Britain to become what the New York Times later deemed “Plague Island.” Though we were spared by the rapid arrival of vaccines this time, that doesn’t mean we will prevail in future races between exponential technological progress and some future exponential-growth scourge, be it biological or climatic.

Since we have no idea when — or if — a breakthrough will come, we ought not wager our future on subjective visions of exponential technological progress and steeper bends in the knee. More to the point, if we really are entering a “Great Acceleration,” we would do well to pause and consider whither and to what ends we are traveling.

¤

Nicholas Agar is the author of How to Be Human in the Digital Economy (MIT Press). Follow him on Twitter at @AgarNicholas.

Stuart Whatley is a senior editor at Project Syndicate. Follow him on Twitter at @StuartWhatley.

¤

Featured image: "Inflection point" by Wolfgang Dvorak is licensed under CC BY-SA 3.0. Image has been cropped and desaturated.

Banner image: "Moore's Law Transistor Count 1970-2020" by Max Roser and Hannah Ritchie is licensed under CC BY 4.0. Image has been cropped and the color changed.
